What is Literature Quality Assessment?

Literature quality assessment, also known as literature review quality assessment, refers to the process of critically evaluating the credibility, reliability, and relevance of existing literature on a particular topic or research question. It involves assessing the quality of scholarly sources to determine their suitability for inclusion in a systematic literature review or research study.

Key aspects of literature quality assessment include:

- Relevance: Assessing the relevance of literature to the research question or topic under investigation. Relevant literature should directly address the research problem and provide valuable insights or evidence related to the study’s objectives.

- Credibility: Evaluating the credibility of sources based on the authority and expertise of the authors, the reputation of the publication venue, and the rigor of the research methods employed. Credible sources are typically peer-reviewed, published in reputable journals or academic presses, and authored by experts in the field.

- Validity and Reliability: Examining the validity and reliability of research findings presented in the literature. Valid studies use appropriate research designs and methodologies to ensure the accuracy and generalizability of their results, while reliable studies produce consistent and replicable findings.

- Currency: Considering the currency of literature to ensure that the most recent and up-to-date research is included in the literature review. Depending on the field of study, it may be important to prioritize recent publications over older ones to capture the latest developments and insights in the field.

- Bias and Objectivity: Assessing the presence of bias or potential conflicts of interest in the literature. It’s important to critically evaluate the objectivity of sources and consider any potential biases that may influence the interpretation or presentation of research findings.

- Scope and Depth: Evaluating the scope and depth of coverage provided by literature sources. High-quality literature should offer comprehensive coverage of the topic, addressing key concepts, theories, and debates, and providing detailed analysis and discussion.

Literature Quality Assessment Tools

Critical Appraisal Skills Programme (CASP)

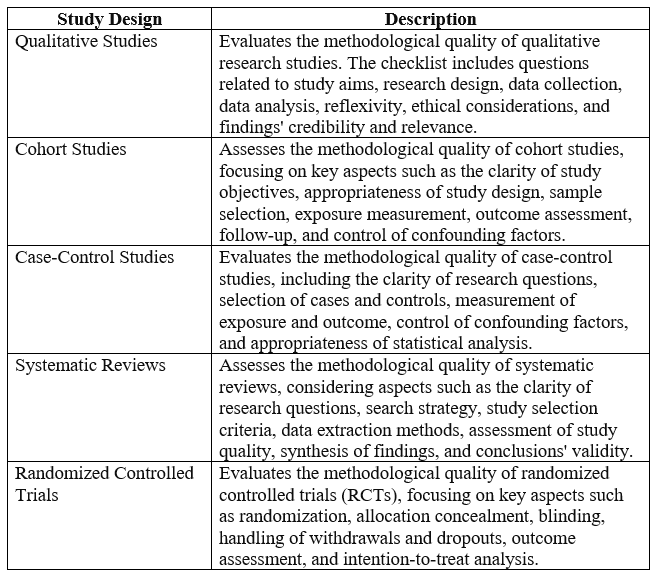

The Critical Appraisal Skills Programme (CASP) provides a series of checklists that help researchers, healthcare practitioners, and students critically appraise research evidence across various study designs. Each CASP tool is tailored to a specific study type or research methodology, allowing users to assess the quality and trustworthiness of individual studies or systematic reviews. The main CASP tools for literature quality assessment are:

- CASP Qualitative Checklist – Used to appraise qualitative research studies. It consists of ten questions covering key aspects of qualitative research, including the clarity of research aims, appropriateness of study design, data collection methods, data analysis techniques, reflexivity, and relevance of findings to practice or policy.

- CASP Systematic Review Checklist – Used to appraise systematic reviews and meta-analyses. It includes ten questions focusing on the clarity of review aims, adequacy of search methods, inclusion criteria, study selection process, assessment of study quality, synthesis of findings, and implications for practice or policy.

- CASP Randomized Controlled Trial (RCT) Checklist – Used to appraise randomized controlled trials. It consists of eleven questions covering key aspects of RCT design and conduct, including randomization, allocation concealment, blinding, completeness of follow-up, intention-to-treat analysis, and generalizability of findings.

- CASP Cohort Study Checklist – Used to appraise cohort studies. It includes twelve questions focusing on key aspects of cohort study design, including the selection of study participants, measurement of exposure and outcome variables, control of confounding factors, follow-up procedures, and validity of study findings.

- CASP Case-Control Study Checklist – Used to appraise case-control studies. It consists of eleven questions covering key aspects of case-control study design, including the selection of cases and controls, measurement of exposure and outcome variables, control of confounding factors, and validity of study findings.

- CASP Economic Evaluation Checklist – Used to appraise economic evaluations, such as cost-effectiveness analyses or cost-benefit analyses. It includes twelve questions focusing on key aspects of economic evaluation methodology, including the identification of relevant costs and outcomes, measurement and valuation of resources, analysis of uncertainty, and interpretation of findings.

Below is an example of a CASP tool for assessing the methodological quality and risk of bias of systematic reviews and meta-analyses:

Each checklist includes questions tailored to its study design, allowing researchers to systematically evaluate the methodological strengths and limitations of the different types of studies included in nursing systematic literature reviews. These tools help reviewers judge the reliability and validity of study findings and inform the overall quality assessment of the evidence base in nursing research.
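To make the checklist idea concrete, here is a minimal Python sketch of how the answers for one study could be recorded and summarised. It assumes each question is answered "yes", "no", or "cant_tell"; the question labels and the function names `summarise_checklist` and `questions_to_discuss` are hypothetical and are not part of any CASP material.

```python
from collections import Counter

# Answer options assumed for this sketch.
ALLOWED_ANSWERS = ("yes", "no", "cant_tell")


def summarise_checklist(answers):
    """Count how many questions received each answer."""
    unknown = set(answers.values()) - set(ALLOWED_ANSWERS)
    if unknown:
        raise ValueError(f"unexpected answers: {sorted(unknown)}")
    counts = Counter(answers.values())
    return {option: counts[option] for option in ALLOWED_ANSWERS}


def questions_to_discuss(answers):
    """Return the questions not answered 'yes', in their original order."""
    return [question for question, answer in answers.items() if answer != "yes"]


# Hypothetical appraisal of one qualitative study (labels are illustrative).
example = {
    "clear_aims": "yes",
    "appropriate_design": "yes",
    "data_collection": "cant_tell",
    "reflexivity": "no",
}
summary = summarise_checklist(example)
flagged = questions_to_discuss(example)
```

The sketch reports counts and flagged questions rather than a single number, so that the specific weaknesses of a study stay visible when you write up the appraisal.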

Newcastle-Ottawa Scale (NOS)

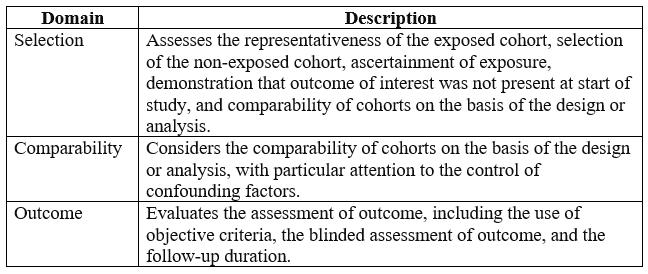

The Newcastle-Ottawa Scale (NOS) is a widely used tool for literature quality assessment of non-randomized studies in meta-analyses and systematic reviews. It was developed to evaluate the methodological quality and risk of bias in observational studies, primarily cohort and case-control studies; adapted versions are also used for cross-sectional studies. The NOS assesses studies based on three main domains: selection of study groups, comparability of groups, and ascertainment of outcomes or exposure. Here’s a detailed description of the NOS tool:

- Selection of Study Groups – This domain evaluates the methodological quality of the selection process for study participants or groups. It includes criteria such as the representativeness of the exposed cohort (for cohort studies) or the selection of cases and controls (for case-control studies). Studies are assessed based on the adequacy of the definition and ascertainment of cases, the representativeness of the non-exposed cohort or controls, and the selection of participants without the outcome of interest.

- Comparability of Groups – This domain assesses the comparability of study groups or cohorts on key characteristics or potential confounding factors. For cohort and case-control studies, this includes the adequacy of control for confounding variables such as age, sex, comorbidities, and other relevant factors. Studies are evaluated based on the methods used to control for confounding, such as matching, stratification, or statistical adjustment.

- Ascertainment of Outcomes or Exposure – This domain evaluates the methods used to ascertain outcomes or exposure variables in the study. It includes criteria such as the validity and reliability of outcome assessment tools, the methods used to measure exposure variables, and the completeness of follow-up. Studies are assessed based on the adequacy of outcome ascertainment, the reliability of outcome measurement, and the completeness of follow-up for cohort studies.

Below is an example of the NOS for assessing the methodological quality and risk of bias in your nursing literature:

Each domain is rated using a star system based on the information provided in the study: a study can earn up to four stars for selection, two for comparability, and three for outcome or exposure, for a maximum of nine stars, with more stars indicating a lower risk of bias. This scale helps researchers systematically evaluate the methodological quality of non-randomized studies and identify potential sources of bias that may affect the validity of study findings in nursing research.
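The NOS, as published, awards stars per domain: at most four for selection, two for comparability, and three for outcome or exposure. The Python sketch below tallies those stars for one study. The dictionary keys and the function name `nos_total` are hypothetical names chosen for illustration.

```python
# Maximum stars per NOS domain (cohort and case-control versions).
NOS_MAX_STARS = {
    "selection": 4,
    "comparability": 2,
    "outcome_or_exposure": 3,
}


def nos_total(stars):
    """Validate the stars awarded per domain and return the total (0-9)."""
    total = 0
    for domain, maximum in NOS_MAX_STARS.items():
        value = stars[domain]  # raises KeyError if a domain was not rated
        if not 0 <= value <= maximum:
            raise ValueError(f"{domain}: expected 0-{maximum} stars, got {value}")
        total += value
    return total


# Hypothetical cohort study rated by a reviewer.
cohort_study = {"selection": 3, "comparability": 1, "outcome_or_exposure": 2}
total_stars = nos_total(cohort_study)
```

Any cut-off used to label a study as good, fair, or poor quality should be stated in your review protocol, because the scale itself does not fix one.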

Cochrane Risk of Bias (RoB) Tool

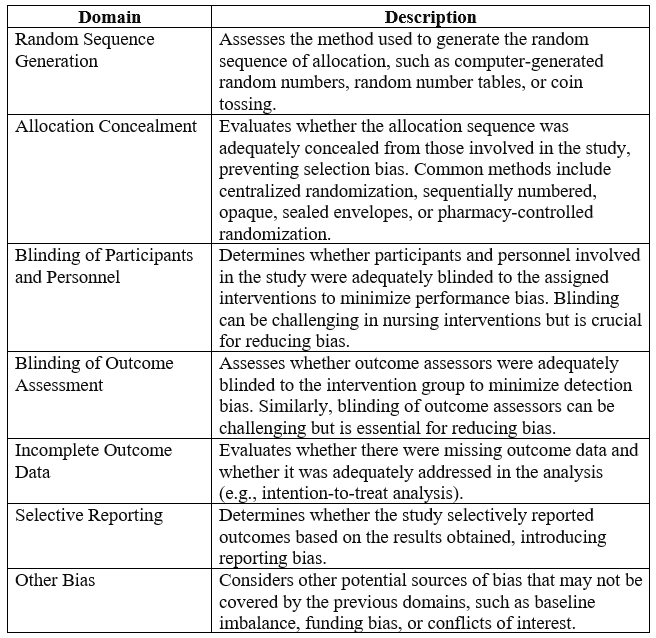

The Cochrane Risk of Bias (RoB) Tool is a widely used literature quality assessment tool in systematic reviews and meta-analyses, particularly in healthcare research. Developed by the Cochrane Collaboration, it aims to assess the risk of bias in individual studies included in systematic reviews by evaluating various aspects of study design and conduct. The tool is structured around six key domains, each representing a potential source of bias:

- Selection Bias – This domain evaluates the adequacy of random sequence generation and allocation concealment to determine whether participants in the study were allocated to intervention groups in an unbiased manner. Studies with adequate randomization methods and concealment of allocation are considered to have low risk of selection bias.

- Performance Bias – Performance bias assesses whether participants and personnel were adequately blinded to intervention allocation to prevent systematic differences between intervention and control groups. Studies with blinding of participants and personnel are considered to have low risk of performance bias.

- Detection Bias – Detection bias evaluates whether outcome assessors were adequately blinded to intervention allocation to prevent knowledge of the intervention influencing outcome assessment. Studies with blinding of outcome assessors are considered to have low risk of detection bias.

- Attrition Bias – Attrition bias examines whether there is incomplete outcome data due to participant dropout or loss to follow-up and evaluates whether missing data were handled appropriately. Studies with low rates of attrition and appropriate handling of missing data are considered to have low risk of attrition bias.

- Reporting Bias – Reporting bias assesses the likelihood of selective reporting of outcomes based on the presence or absence of pre-specified outcomes in study reports. Studies with complete and transparent reporting of all pre-specified outcomes are considered to have low risk of reporting bias.

- Other Sources of Bias – This domain considers other potential sources of bias not covered by the previous domains, such as baseline imbalance, conflicts of interest, or deviations from the intended intervention. Studies with no apparent additional sources of bias are considered to have low risk of bias in this domain.

Below is an example of the Cochrane Risk of Bias Tool for assessing the methodological quality and risk of bias in systematic literature:

Each domain of the Cochrane RoB Tool is judged against predefined criteria and assigned a rating of low, high, or unclear risk of bias; the tool does not produce a numerical score. It provides a structured framework for assessing the methodological quality of individual studies and informing judgments about the overall risk of bias within a systematic review. By systematically evaluating the risk of bias across multiple domains, researchers can assess the strength of evidence and make more informed decisions about the reliability and validity of study findings.
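As an illustration, the per-domain judgments for a study can be stored and combined into a study-level summary. The Python sketch below assumes one common convention: a study is "high" risk if any domain is high, "unclear" if any domain is unclear, and "low" only if every domain is low. That rule, the domain keys, and the function name `overall_risk` are assumptions of this sketch; review teams often restrict the rule to the domains they consider key for a given outcome.

```python
# The six bias domains described above, used as dictionary keys.
ROB_DOMAINS = (
    "selection",
    "performance",
    "detection",
    "attrition",
    "reporting",
    "other",
)
JUDGMENTS = {"low", "high", "unclear"}


def overall_risk(judgments):
    """Combine per-domain judgments using a worst-judgment-wins convention."""
    values = []
    for domain in ROB_DOMAINS:
        value = judgments[domain]  # raises KeyError if a domain is missing
        if value not in JUDGMENTS:
            raise ValueError(f"{domain}: unexpected judgment {value!r}")
        values.append(value)
    if "high" in values:
        return "high"
    if "unclear" in values:
        return "unclear"
    return "low"


# Hypothetical trial with unblinded outcome assessment.
trial = {
    "selection": "low",
    "performance": "unclear",
    "detection": "high",
    "attrition": "low",
    "reporting": "low",
    "other": "low",
}
trial_summary = overall_risk(trial)
```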

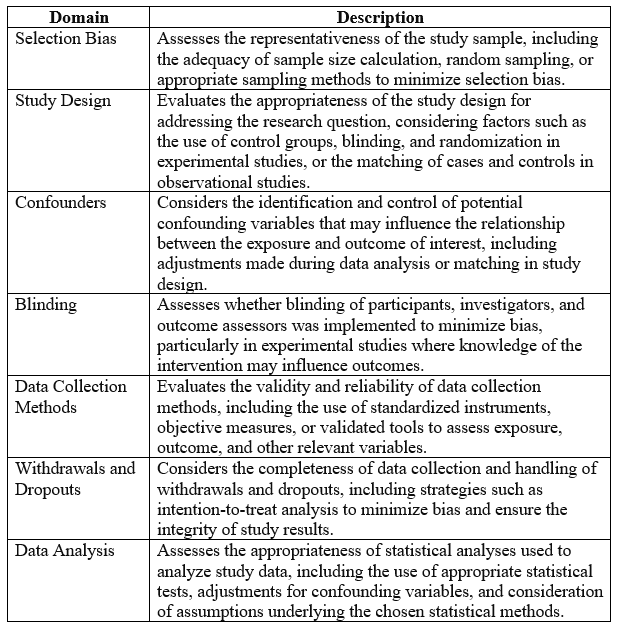

Quality Assessment Tool for Quantitative Studies

The Quality Assessment Tool for Quantitative Studies (QATQS) is a systematic tool designed to assess the methodological quality and risk of bias in quantitative research studies selected for systematic reviews. Developed by the Effective Public Health Practice Project (EPHPP), the QATQS provides a standardized approach for evaluating the quality of various types of quantitative research, including randomized controlled trials (RCTs), cohort studies, case-control studies, and cross-sectional studies.

Here is a detailed description of the components and criteria included in the QATQS:

- Selection Bias – This component evaluates the adequacy of the selection process for study participants or groups. It includes criteria such as the representativeness of the study sample, the method of participant recruitment, and the response rate. Studies with representative and adequately recruited samples are considered to have low risk of selection bias.

- Study Design – This component assesses the appropriateness of the study design for addressing the research question. It considers factors such as the clarity and specificity of study objectives, the suitability of the study design for the research question, and the appropriateness of the comparison group (if applicable). Studies with clear objectives and appropriate study designs are considered to have low risk of bias.

- Confounding Variables – This component evaluates the control of confounding variables in the study design and analysis. It assesses whether potential confounders were identified and measured, whether appropriate methods were used to control for confounding, and whether confounders were accounted for in the analysis. Studies with adequate control of confounding variables are considered to have low risk of bias.

- Blinding – This component assesses whether blinding (or masking) of participants, personnel, and outcome assessors was implemented in the study. It considers factors such as the adequacy of blinding procedures, the likelihood of unmasking, and the reporting of blinding status. Studies with adequate blinding procedures are considered to have low risk of bias.

- Data Collection Methods – This component evaluates the reliability and validity of data collection methods used in the study. It assesses the clarity and appropriateness of data collection instruments, the training and qualifications of data collectors, and the reliability and validity of outcome measures. Studies with reliable and valid data collection methods are considered to have low risk of bias.

- Withdrawals and Dropouts – This component assesses the completeness of follow-up and the handling of withdrawals and dropouts in the study. It considers factors such as the rate of participant withdrawal or loss to follow-up, the reasons for withdrawal, and the methods used to account for missing data. Studies with minimal loss to follow-up and appropriate handling of withdrawals and dropouts are considered to have low risk of bias.

Below is an example of the Quality Assessment Tool for Quantitative Studies (QATQS) for assessing the methodological quality and risk of bias in systematic literature:

Each component is rated strong, moderate, or weak based on the information provided in the study, and the component ratings are combined into a global rating: strong if no component is rated weak, moderate if one component is rated weak, and weak if two or more components are rated weak. This tool helps researchers systematically assess the methodological quality of quantitative research studies and identify potential sources of bias that may affect the validity of study findings in the selected literature.
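The EPHPP tool rates each of the six components as strong, moderate, or weak and then derives a global rating from the number of weak components: none gives strong, one gives moderate, two or more give weak. A minimal Python sketch of that rule follows; the component keys and the function name `ephpp_global_rating` are hypothetical.

```python
# The six QATQS components described above, used as dictionary keys.
COMPONENTS = (
    "selection_bias",
    "study_design",
    "confounders",
    "blinding",
    "data_collection",
    "withdrawals_dropouts",
)
RATINGS = {"strong", "moderate", "weak"}


def ephpp_global_rating(component_ratings):
    """Derive the global rating from the count of 'weak' component ratings."""
    weak_count = 0
    for component in COMPONENTS:
        rating = component_ratings[component]  # KeyError if not rated
        if rating not in RATINGS:
            raise ValueError(f"{component}: unexpected rating {rating!r}")
        if rating == "weak":
            weak_count += 1
    if weak_count == 0:
        return "strong"
    if weak_count == 1:
        return "moderate"
    return "weak"


# Hypothetical cohort study with no blinding.
study = {
    "selection_bias": "moderate",
    "study_design": "moderate",
    "confounders": "strong",
    "blinding": "weak",
    "data_collection": "strong",
    "withdrawals_dropouts": "moderate",
}
global_rating = ephpp_global_rating(study)
```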

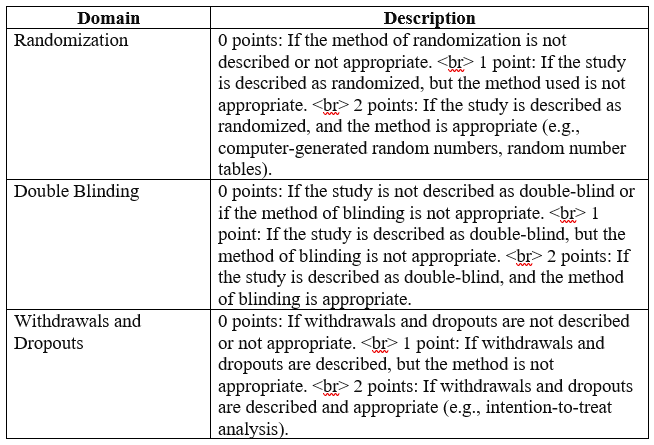

Jadad Scale

The Jadad Scale is a tool used to assess the methodological quality of clinical trials, particularly randomized controlled trials (RCTs). It was developed by Alejandro Jadad and colleagues in 1996 and is widely used in healthcare research to evaluate the quality of RCTs included in systematic reviews and meta-analyses. The Jadad Scale focuses on three key methodological components of clinical trials: randomization, blinding, and the handling of withdrawals and dropouts.

Here is a detailed description of each component:

Randomization (0-2 points) – This component assesses whether the trial is described as randomized and how the random sequence was generated. Allocation concealment is not scored by the Jadad Scale. Studies are awarded:

- 2 points if the study is described as randomized and the method of sequence generation is described and appropriate (e.g., computer-generated randomization, random number table).

- 1 point if the study is described as randomized but the method of sequence generation is not described.

- 0 points if the study is not described as randomized, or if the described method is inappropriate (e.g., alternation, case record number), in which case the point for randomization is deducted.

Blinding (0-2 points) – This component assesses whether the trial is described as double-blind and how blinding was achieved. Studies are awarded:

- 2 points if the study is described as double-blind and the method of blinding is described and appropriate (e.g., an identical placebo).

- 1 point if the study is described as double-blind but the method of blinding is not described.

- 0 points if the study is not described as double-blind, or if the described method is inappropriate (e.g., comparing a tablet with an injection without a double dummy), in which case the point for blinding is deducted.

Handling of Withdrawals and Dropouts (0-1 point) – This component assesses the reporting of participant withdrawals and dropouts. Studies are awarded:

- 1 point if the number of and reasons for withdrawals and dropouts are described for each group. A trial with no withdrawals earns the point only if it states this explicitly.

- 0 points if withdrawals and dropouts are not described.

Below is an example of the Jadad Scale for assessing the methodological quality and risk of bias in your literature:

The total score ranges from 0 to 5, with higher scores indicating higher methodological quality. This scale helps researchers evaluate key aspects of RCTs, including randomization, blinding, and handling of withdrawals and dropouts, to assess the risk of bias and reliability of study findings in nursing research.
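The arithmetic of the scale is simple enough to sketch in Python: the three component scores are checked against their ranges and added up. The key names, the function name `jadad_total`, and the two example trials are hypothetical.

```python
# Allowed (minimum, maximum) points per Jadad component.
JADAD_RANGES = {
    "randomization": (0, 2),
    "blinding": (0, 2),
    "withdrawals": (0, 1),
}


def jadad_total(points):
    """Validate the component points and return the total score (0-5)."""
    total = 0
    for component, (low, high) in JADAD_RANGES.items():
        value = points[component]  # KeyError if a component was not scored
        if not low <= value <= high:
            raise ValueError(f"{component}: expected {low}-{high}, got {value}")
        total += value
    return total


# Hypothetical scores for two trials in a review.
trials = {
    "Trial A": {"randomization": 2, "blinding": 1, "withdrawals": 1},
    "Trial B": {"randomization": 1, "blinding": 0, "withdrawals": 0},
}
totals = {name: jadad_total(points) for name, points in trials.items()}
```

Keeping the component scores alongside the total makes it easier to report why a trial scored low, for example because blinding was not described.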

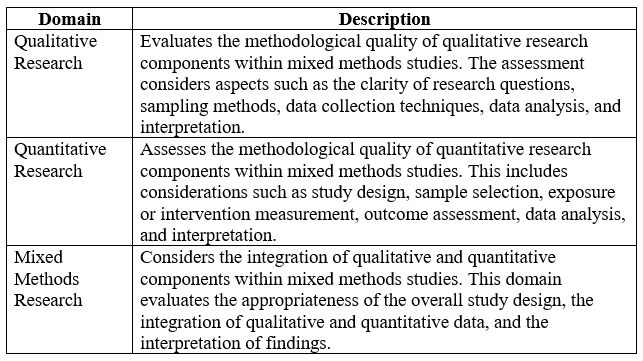

Mixed Methods Appraisal Tool (MMAT)

The Mixed Methods Appraisal Tool (MMAT) is a critical appraisal tool designed to assess the methodological quality of studies in reviews that combine qualitative, quantitative, and mixed methods evidence. First developed in 2006 by Pluye and colleagues and revised in 2011 and 2018 (Hong and colleagues), the MMAT is widely used to evaluate the quality of studies that employ both qualitative and quantitative methods within a single research design. The MMAT provides a structured approach for appraising various types of mixed methods studies, including convergent, explanatory sequential, exploratory sequential, and embedded designs.

Here is a detailed description of the MMAT and its components:

Screening Questions – The MMAT begins with two screening questions that determine whether a study can be appraised with the tool: whether the research questions are clear, and whether the collected data allow those questions to be addressed. A study that does not pass screening may not be suitable for appraisal with the MMAT.

Methodological Quality Criteria – For mixed methods studies, the 2018 version of the MMAT assesses methodological quality across five criteria:

- Rationale for Mixed Methods: Evaluates whether there is an adequate rationale for using a mixed methods design to address the research question.

- Integration of Components: Examines whether the qualitative and quantitative components of the study are effectively integrated to answer the research question.

- Interpretation of Integration: Assesses whether the outputs of integrating the qualitative and quantitative components are adequately interpreted.

- Divergences and Inconsistencies: Considers whether divergences and inconsistencies between the quantitative and qualitative results are adequately addressed.

- Quality of Each Component: Assesses whether the qualitative and quantitative components each adhere to the quality criteria of their own methodological tradition, such as appropriate sampling, measurement, and analysis.

Scoring System – Each criterion within the MMAT is answered “Yes”, “No”, or “Can’t tell” to indicate whether the study meets the criterion, does not meet it, or does not report enough information to judge.

Overall Appraisal – Once each criterion has been assessed, an overall appraisal of the methodological quality of the study is typically provided. The developers of the 2018 version discourage reducing the ratings to a single overall score and recommend presenting the rating for each criterion, so that the specific strengths and weaknesses of a study remain visible.

Interpretation and Reporting – Finally, the MMAT provides guidance on how to interpret and report the results of the critical appraisal process. This may include recommendations for incorporating the findings into research syntheses, systematic reviews, or meta-analyses.

Below is an example of the Mixed Methods Appraisal Tool (MMAT) for assessing the methodological quality and risk of bias in your literature review.

Generally, the Mixed Methods Appraisal Tool provides a comprehensive framework for assessing the methodological quality of mixed methods studies, helping researchers make informed judgments about the credibility and validity of study findings.

Summary

These tools provide structured frameworks for literature quality assessment across various study designs and methodologies, empowering you to critically appraise individual studies and make informed decisions about their inclusion in your systematic literature review. It is advisable to apply the same tool consistently to all the studies of a given design that you include in your systematic literature review.
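As a quick way to choose among the tools, the pairing of study designs and tools described in this article can be sketched as a Python lookup table. The design keys and the function name `candidate_tools` are hypothetical, and the mapping reflects only the tools covered here, not an exhaustive list.

```python
# Study design -> appraisal tools discussed in this article.
TOOLS_BY_DESIGN = {
    "qualitative": ["CASP Qualitative Checklist"],
    "systematic_review": ["CASP Systematic Review Checklist"],
    "rct": [
        "CASP Randomized Controlled Trial (RCT) Checklist",
        "Cochrane Risk of Bias (RoB) Tool",
        "Jadad Scale",
        "Quality Assessment Tool for Quantitative Studies (QATQS)",
    ],
    "cohort": [
        "CASP Cohort Study Checklist",
        "Newcastle-Ottawa Scale (NOS)",
        "Quality Assessment Tool for Quantitative Studies (QATQS)",
    ],
    "case_control": [
        "CASP Case-Control Study Checklist",
        "Newcastle-Ottawa Scale (NOS)",
        "Quality Assessment Tool for Quantitative Studies (QATQS)",
    ],
    "economic_evaluation": ["CASP Economic Evaluation Checklist"],
    "mixed_methods": ["Mixed Methods Appraisal Tool (MMAT)"],
}


def candidate_tools(design):
    """Return the tools from this article that fit the given study design."""
    try:
        return list(TOOLS_BY_DESIGN[design])
    except KeyError:
        known = ", ".join(sorted(TOOLS_BY_DESIGN))
        raise ValueError(f"unknown design {design!r}; expected one of: {known}")


cohort_options = candidate_tools("cohort")
```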